Thursday, August 29, 2013

Wednesday, August 28, 2013

Tuesday, August 27, 2013

Sunday, August 25, 2013

Friday, August 23, 2013

Thursday, August 22, 2013

Wednesday, August 21, 2013

Tuesday, August 20, 2013

Sunday, August 18, 2013

Saturday, August 17, 2013

Wednesday, August 14, 2013

Tuesday, August 13, 2013

Monday, August 12, 2013

Peer-to-peer (P2P) networks

A peer-to-peer (P2P) network is a type of decentralized and distributed network architecture in which individual nodes in the network (called "peers") act as both suppliers and consumers of resources, in contrast to the centralized client–server model where client nodes request access to resources provided by central servers.

In a peer-to-peer network, tasks (such as searching for files or streaming audio/video) are shared amongst multiple interconnected peers who each make a portion of their resources (such as processing power, disk storage or network bandwidth) directly available to other network participants, without the need for centralized coordination by servers.

A peer-to-peer (P2P) network in which interconnected nodes ("peers") share resources amongst each other without the use of a centralized administrative system

Architecture of peer-to-peer systems

A peer-to-peer network is designed around the notion of equal peer nodes simultaneously functioning as both "clients" and "servers" to the other nodes on the network. This model of network arrangement differs from the client–server model, where communication is usually to and from a central server. A typical example of a file transfer that uses the client–server model is the File Transfer Protocol (FTP) service, in which the client and server programs are distinct: the clients initiate the transfer, and the servers satisfy these requests.

A network based on the client–server model, where individual clients request services and resources from centralized servers

Routing and resource discovery

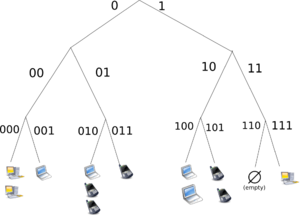

Peer-to-peer networks generally implement some form of virtual overlay network on top of the physical network topology, where the nodes in the overlay form a subset of the nodes in the physical network. Data is still exchanged directly over the underlying TCP/IP network, but at the application layer peers are able to communicate with each other directly, via the logical overlay links (each of which corresponds to a path through the underlying physical network). Overlays are used for indexing and peer discovery, and make the P2P system independent from the physical network topology. Based on how the nodes are linked to each other within the overlay network, and how resources are indexed and located, we can classify networks as unstructured or structured (or as a hybrid between the two).

Unstructured networks

Unstructured peer-to-peer networks do not impose a particular structure on the overlay network by design, but rather are formed by nodes that randomly form connections to each other. Gnutella, Gossip, and Kazaa are examples of unstructured P2P protocols.

Because there is no structure globally imposed upon them, unstructured networks are easy to build and allow for localized optimizations to different regions of the overlay. Also, because the role of all peers in the network is the same, unstructured networks are highly robust in the face of high rates of "churn" -- that is, when large numbers of peers are frequently joining and leaving the network.

However, the primary limitations of unstructured networks also arise from this lack of structure. In particular, when a peer wants to find a desired piece of data in the network, the search query must be flooded through the network to find as many peers as possible that share the data. Flooding causes a very high amount of signaling traffic in the network, uses more CPU and memory (by requiring every peer to process all search queries), and does not ensure that search queries will always be resolved. Furthermore, since there is no correlation between a peer and the content managed by it, there is no guarantee that flooding will find a peer that has the desired data. Popular content is likely to be available at several peers, and any peer searching for it is likely to find the same thing. But if a peer is looking for rare data shared by only a few other peers, then it is highly unlikely that the search will be successful.
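A minimal sketch of flooding with a hop limit (a time-to-live, or TTL, such as Gnutella-style queries carry) may make the trade-off concrete. The overlay, the `shared` map and the function name `flood_search` are hypothetical. The query spreads breadth-first, every forwarded copy counts as one message, and forwarding stops once the TTL reaches zero.

```python
from collections import deque


def flood_search(overlay, shared, start, item, ttl):
    """Flood a query from `start`, forwarding it at most `ttl` hops.

    overlay: dict mapping peer -> list of neighbour peers (hypothetical overlay)
    shared:  dict mapping peer -> set of items that peer shares
    Returns (set of peers holding `item`, number of query messages sent).
    """
    seen = {start}
    frontier = deque([(start, ttl)])
    hits, messages = set(), 0
    while frontier:
        peer, remaining = frontier.popleft()
        if item in shared.get(peer, set()):
            hits.add(peer)
        if remaining == 0:
            continue  # TTL exhausted: this peer does not forward the query
        for neighbour in overlay.get(peer, []):
            # Every forwarded copy costs a message, including duplicates
            # sent to peers that have already seen the query.
            messages += 1
            if neighbour not in seen:
                seen.add(neighbour)
                frontier.append((neighbour, remaining - 1))
    return hits, messages


# A small line-shaped overlay: A - B - C - D - E
overlay = {"A": ["B"], "B": ["A", "C"], "C": ["B", "D"], "D": ["C", "E"], "E": ["D"]}
shared = {"B": {"hit-song"}, "D": {"hit-song"}, "E": {"rare-demo"}}
```

Starting from A with a TTL of 3, the popular item is found on B and D after 5 messages, while the rare item held only by E is missed; raising the TTL to 4 finds it, at the cost of 7 messages. In a real overlay with many neighbours per peer, the message count grows far faster than in this line.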

Overlay network diagram for an unstructured P2P network, illustrating the ad hoc nature of the connections between nodes

Structured networks

In structured peer-to-peer networks the overlay is organized into a specific topology, and the protocol ensures that any node can efficiently search the network for a file/resource, even if the resource is extremely rare.

The most common type of structured P2P network implements a distributed hash table (DHT), in which a variant of consistent hashing is used to assign ownership of each file to a particular peer. This enables peers to search for resources on the network using a hash table: that is, (key, value) pairs are stored in the DHT, and any participating node can efficiently retrieve the value associated with a given key.
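The sketch below illustrates the consistent-hashing idea under simple assumptions. Peers and keys are hashed with SHA-1 onto a 32-bit identifier ring, and a key is owned by the first peer at or after its position (the successor rule that Chord uses). The class and method names are hypothetical. A real DHT also needs routing tables, so that lookups do not require every peer to know every other peer.

```python
import bisect
import hashlib


def ring_position(name: str, bits: int = 32) -> int:
    """Map a peer name or a key onto a 2**bits identifier ring using SHA-1."""
    digest = hashlib.sha1(name.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (2 ** bits)


class ConsistentHashRing:
    """Toy ownership map: each key belongs to the first peer clockwise from it."""

    def __init__(self, peers):
        # Sorted list of (ring position, peer name) pairs.
        self._ring = sorted((ring_position(p), p) for p in peers)

    def owner(self, key: str) -> str:
        pos = ring_position(key)
        positions = [p for p, _ in self._ring]  # O(n) rebuild; fine for a sketch
        i = bisect.bisect_left(positions, pos)
        # Wrap around to the first peer if the key lies past the last peer.
        return self._ring[i % len(self._ring)][1]

    def add_peer(self, peer: str) -> None:
        bisect.insort(self._ring, (ring_position(peer), peer))

    def remove_peer(self, peer: str) -> None:
        self._ring.remove((ring_position(peer), peer))
```

When a peer joins, the only keys that change owner are those that now fall between the new peer and its predecessor, and they all move to the new peer; when it leaves, they return. This is what lets a DHT absorb joins and departures without reshuffling every key.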

However, in order to route traffic efficiently through the network, nodes in a structured overlay must maintain lists of neighbors that satisfy specific criteria. This makes them less robust in networks with a high rate of churn (i.e. with large numbers of nodes frequently joining and leaving the network). More recent evaluations of P2P resource discovery solutions under real workloads have pointed out several issues in DHT-based solutions, such as the high cost of advertising/discovering resources and static and dynamic load imbalance.

Notable distributed networks that use DHTs include BitTorrent's distributed tracker, the Kad network, the Storm botnet, YaCy, and the Coral Content Distribution Network. Some prominent research projects include the Chord project, Kademlia, the PAST storage utility, P-Grid (a self-organized and emerging overlay network), and the CoopNet content distribution system. DHT-based networks have also been widely used for efficient resource discovery in grid computing systems, as they aid in resource management and scheduling of applications.
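Kademlia, one of the projects listed above, measures closeness between node IDs and keys with bitwise XOR. The following is a minimal sketch of that metric. It assumes small integer IDs for readability (Kademlia itself uses 160-bit IDs), and the helper names are hypothetical.

```python
def xor_distance(a: int, b: int) -> int:
    """Kademlia-style distance between two IDs (node ID or key)."""
    return a ^ b


def closest_nodes(target: int, node_ids, k: int = 3):
    """Return the k known node IDs closest to `target` under the XOR metric."""
    return sorted(node_ids, key=lambda n: xor_distance(n, target))[:k]


def bucket_index(own_id: int, other_id: int) -> int:
    """Index of the k-bucket that `other_id` belongs to from `own_id`'s view:
    the position of the highest bit in which the two (distinct) IDs differ."""
    return xor_distance(own_id, other_id).bit_length() - 1
```

For a target of 0b1010 and known nodes 0b0001, 0b1000, 0b1011, 0b1110 and 0b0110, `closest_nodes` returns 0b1011, 0b1000 and 0b1110 (distances 1, 2 and 4). Those are the nodes an iterative lookup would query next. Because XOR is symmetric, two nodes agree on how far apart they are, which keeps routing tables consistent.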

Hybrid models

Hybrid models are a combination of peer-to-peer and client–server models. A common hybrid model is to have a central server that helps peers find each other. Spotify is an example of a hybrid model.
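A hybrid system's central server can be as simple as a directory that records which peers share which items, much as Napster's central index did. The files themselves then move directly between peers. The `IndexServer` class and the address strings below are hypothetical; this is a sketch, not any real service's API.

```python
from collections import defaultdict


class IndexServer:
    """Hypothetical central directory used only for peer discovery."""

    def __init__(self):
        self._holders = defaultdict(set)  # item name -> addresses of peers sharing it

    def register(self, peer_addr: str, items) -> None:
        """A peer announces the items it is willing to serve."""
        for item in items:
            self._holders[item].add(peer_addr)

    def unregister(self, peer_addr: str) -> None:
        """Forget a peer that has left the network."""
        for holders in self._holders.values():
            holders.discard(peer_addr)

    def lookup(self, item: str) -> list:
        # The server only answers "who has it?"; the transfer itself is peer-to-peer.
        return sorted(self._holders.get(item, set()))
```

The design keeps search cheap and complete, unlike flooding, but reintroduces a central point of failure and control for discovery.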

Security and trust

Peer-to-peer systems pose unique challenges from a computer security perspective.

Like any other form of software, P2P applications can contain vulnerabilities. What makes this particularly dangerous for P2P software, however, is that peer-to-peer applications act as servers as well as clients, meaning that they can be more vulnerable to remote exploits.

Routing attacks

Also, since each node plays a role in routing traffic through the network, malicious users can perform a variety of "routing attacks" or denial-of-service attacks. Examples of common routing attacks include "incorrect lookup routing", whereby malicious nodes deliberately forward requests incorrectly or return false results; "incorrect routing updates", where malicious nodes corrupt the routing tables of neighboring nodes by sending them false information; and "incorrect routing network partition", where newly joining nodes bootstrap via a malicious node, which places them in a partition of the network populated by other malicious nodes.

Corrupted data and malware

The prevalence of malware varies between different peer-to-peer protocols. Studies analyzing the spread of malware on P2P networks found, for example, that 63% of the answered download requests on the Limewire network contained some form of malware, whereas only 3% of the content on OpenFT contained malware. In both cases, the top three most common types of malware accounted for the large majority of cases (99% in Limewire, and 65% in OpenFT). Another study analyzing traffic on the Kazaa network found that 15% of the 500,000-file sample taken were infected by one or more of the 365 different computer viruses that were tested for.

Corrupted data can also be distributed on P2P networks by modifying files that are already being shared on the network. For example, on the FastTrack network, the RIAA managed to introduce faked chunks into downloads and downloaded files (mostly MP3 files). Files infected with the RIAA virus were unusable afterwards and contained malicious code. The RIAA is also known to have uploaded fake music and movies to P2P networks in order to deter illegal file sharing. Consequently, P2P networks today have seen an enormous increase in their security and file verification mechanisms. Modern hashing, chunk verification and different encryption methods have made most networks resistant to almost any type of attack, even when major parts of the respective network have been replaced by faked or nonfunctional hosts.
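Chunk verification works because the list of expected piece hashes comes from a trusted source (in BitTorrent, the SHA-1 piece hashes stored in the .torrent metainfo file). A faked chunk from any peer is therefore detected and can be re-downloaded elsewhere. The following is a minimal sketch with a deliberately tiny, illustrative piece size; the function names are hypothetical.

```python
import hashlib

PIECE_SIZE = 4  # tiny piece size for readability; real clients use far larger pieces


def piece_hashes(data: bytes, piece_size: int = PIECE_SIZE) -> list:
    """Publisher side: SHA-1 digest of every fixed-size piece of the file."""
    return [hashlib.sha1(data[i:i + piece_size]).digest()
            for i in range(0, len(data), piece_size)]


def verify_piece(index: int, piece: bytes, expected: list) -> bool:
    """Downloader side: accept a piece only if its digest matches the trusted list."""
    return hashlib.sha1(piece).digest() == expected[index]
```

A client would call `verify_piece` on every piece as it arrives, discard any piece that fails, and request it again from a different peer. A single poisoned chunk then costs one retransmission instead of corrupting the whole file.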

Privacy/anonymity

Some peer-to-peer networks (e.g. Freenet) place a heavy emphasis on privacy and anonymity, that is, on ensuring that the contents of communications are hidden from eavesdroppers and that the identities/locations of the participants are concealed. Public key cryptography can be used to provide encryption, data validation, authorization, and authentication for data/messages. Onion routing and other mix network protocols (e.g. Tarzan) can be used to provide anonymity.

Incentivizing resource sharing and cooperation

Cooperation is at the foundation of P2P systems, which only reach their full potential when large numbers of nodes contribute resources. But in current practice P2P networks often contain large numbers of users who utilize resources shared by other nodes, but who do not share anything themselves (often referred to as the "freeloader problem"). Thus a variety of incentive mechanisms have been implemented to encourage or force nodes to contribute resources.
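One well-known incentive mechanism is BitTorrent's tit-for-tat choking. A client preferentially uploads to ("unchokes") the peers that upload to it fastest, and periodically unchokes one random peer so that newcomers can bootstrap. The sketch below assumes measured upload rates are already available; the function name and the number of regular slots are illustrative assumptions.

```python
import random


def choose_unchoked(upload_rates: dict, regular_slots: int = 4, rng=random):
    """Tit-for-tat style choking decision.

    upload_rates: peer -> rate at which that peer has recently uploaded to us.
    Returns the peers we will upload to: the fastest `regular_slots` uploaders,
    plus one randomly chosen "optimistic unchoke" among the rest.
    """
    ranked = sorted(upload_rates, key=upload_rates.get, reverse=True)
    unchoked = ranked[:regular_slots]
    others = ranked[regular_slots:]
    if others:
        unchoked.append(rng.choice(others))  # optimistic unchoke gives others a chance
    return unchoked
```

A peer that never uploads (a freeloader in the sense above) is never chosen for a regular slot and only receives data through the occasional optimistic unchoke, so contributing is the reliable way to get good download speeds.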

Some researchers have explored the benefits of enabling virtual communities to self-organize and introduce incentives for resource sharing and cooperation, arguing that the social aspect missing from today's P2P systems should be seen both as a goal and as a means for self-organized virtual communities to be built and fostered. Ongoing research efforts to design effective incentive mechanisms in P2P systems, based on principles from game theory, are beginning to take a more psychological and information-processing direction.

The BitTorrent protocol: In this animation, the colored bars beneath all of the 7 clients in the upper region above represent the file being shared, with each color representing an individual piece of the file. After the initial pieces transfer from the seed (large system at the bottom), the pieces are individually transferred from client to client. The original seeder only needs to send out one copy of the file for all the clients to receive a copy.

Applications

Peer-to-peer networks underlie numerous applications. The most commonly known application is file sharing, which popularized the technology.

Communications

Skype, an Internet telephony network, formerly used P2P technology.

Instant messaging systems and online chat networks

Content delivery

In P2P networks, clients both provide and use resources. This means that, unlike client–server systems, the content-serving capacity of peer-to-peer networks can actually increase as more users begin to access the content (especially with protocols such as BitTorrent that require users to share). This property is one of the major advantages of using P2P networks, because it makes the setup and running costs very small for the original content distributor.
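The claim that capacity grows with the number of users can be checked with a common idealized model that ignores protocol overhead. Assume a file of F bits, a server upload rate u_s, N downloaders each with upload rate u_p, and a slowest download rate d_min. A server alone needs at least max(N*F/u_s, F/d_min) to deliver the file to everyone. With peers re-uploading, the bound becomes max(F/u_s, F/d_min, N*F/(u_s + N*u_p)). The following sketches both bounds; the function names are hypothetical.

```python
def client_server_time(n, file_bits, server_up, min_down):
    """Lower bound (seconds) for a single server to deliver the file to n clients."""
    return max(n * file_bits / server_up,  # server must push n full copies
               file_bits / min_down)       # slowest client must download one copy


def p2p_time(n, file_bits, server_up, min_down, peer_up):
    """Lower bound (seconds) when each of the n peers also uploads at peer_up."""
    total_upload = server_up + n * peer_up
    return max(file_bits / server_up,          # server must send at least one copy
               file_bits / min_down,           # slowest peer must download one copy
               n * file_bits / total_upload)   # all n copies share the total upload
```

Consider a 1 GB file (8e9 bits) with u_s = 100 Mbit/s, d_min = 20 Mbit/s and u_p = 10 Mbit/s. The client–server bound rises from 800 s at 10 users to 80,000 s at 1,000 users. The P2P bound is 400 s at 10 users and only about 792 s at 1,000 users, because every new downloader also adds upload capacity.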

File-sharing networks

The use of peer-to-peer file-sharing software such as aMule is responsible for the bulk of P2P Internet traffic

Many file-sharing networks, such as Gnutella, G2 and the eDonkey network, popularized peer-to-peer technologies. Since 2004, such networks have been the largest contributor of network traffic on the Internet.

Peer-to-peer content services, e.g. caches for improved performance such as Correli Caches

Software publication and distribution (Linux, several games); via file sharing networks

Intellectual property law and illegal sharing

Peer-to-peer networking involves data transfer from one user to another without using an intermediate server. Companies developing P2P applications have been involved in numerous legal cases, primarily in the United States, mostly over issues surrounding copyright law. Two major cases are Grokster vs RIAA and MGM Studios, Inc. v. Grokster, Ltd. In both cases, the file-sharing technology was held to be legal in the lower courts as long as the developers had no ability to prevent the sharing of copyrighted material. In MGM Studios, Inc. v. Grokster, Ltd., however, the U.S. Supreme Court ruled in 2005 that distributors who actively induce copyright infringement can be held liable.

Network neutrality

Peer-to-peer applications present one of the core issues in the network neutrality controversy. Internet service providers (ISPs) have been known to throttle P2P file-sharing traffic because of its high bandwidth usage. Compared to Web browsing, e-mail or many other uses of the Internet, where data is transferred only in short intervals and in relatively small quantities, P2P file sharing often involves relatively heavy bandwidth usage due to ongoing file transfers and swarm/network coordination packets.

In October 2007, Comcast, one of the largest broadband Internet providers in the USA, started blocking P2P applications such as BitTorrent. Its rationale was that P2P is mostly used to share illegal content, and that its infrastructure is not designed for continuous, high-bandwidth traffic. Critics point out that P2P networking has legitimate uses, and that this is another way that large providers are trying to control use and content on the Internet and to steer people toward a client–server application architecture. The client–server model presents financial barriers to entry for small publishers and individuals, and can be less efficient for sharing large files.

As a reaction to this bandwidth throttling, several P2P applications started implementing protocol obfuscation, such as BitTorrent protocol encryption. Techniques for achieving protocol obfuscation involve removing otherwise easily identifiable properties of protocols, such as deterministic byte sequences and packet sizes, by making the data look random. One ISP response to the high bandwidth usage is P2P caching, in which the ISP stores the parts of files most accessed by P2P clients in order to reduce traffic on its links to the wider Internet.
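The protocol-obfuscation point can be illustrated with two simple measurements a traffic classifier might use. The plain BitTorrent handshake begins with the byte 19 followed by the string "BitTorrent protocol", so a fixed-prefix match identifies it. Obfuscated traffic removes such prefixes and looks statistically random. This is a sketch only: the helper names are hypothetical, and random bytes stand in for real encrypted traffic.

```python
import math
from collections import Counter

# The plain (unobfuscated) BitTorrent handshake starts with the byte 19
# followed by the ASCII string "BitTorrent protocol".
HANDSHAKE_PREFIX = bytes([19]) + b"BitTorrent protocol"


def looks_like_bittorrent(first_bytes: bytes) -> bool:
    """Naive deep-packet-inspection rule: match the fixed handshake prefix."""
    return first_bytes.startswith(HANDSHAKE_PREFIX)


def byte_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte: low for repetitive or text-like data,
    close to 8 for data that looks uniformly random."""
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())
```

The handshake prefix has under 4 bits of entropy per byte. A large sample of `os.urandom` output, used here as a stand-in for encrypted traffic, comes out very close to 8 bits per byte and offers no fixed prefix to match. This is why obfuscated flows are hard to single out by content alone.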

Streaming media

- Streaming media. P2PTV and PDTP. Applications include TVUPlayer, Joost, CoolStreaming, Cybersky-TV, PPLive, LiveStation, Giraffic and Didiom.

- Spotify uses a peer-to-peer network along with streaming servers to stream music to its desktop music player.

- Peercasting for multicasting streams. See PeerCast, IceShare, FreeCast, Rawflow

- Pennsylvania State University, MIT and Simon Fraser University are carrying out a project called LionShare, designed to facilitate file sharing among educational institutions globally.

- Osiris (Serverless Portal System) allows its users to create anonymous and autonomous web portals distributed via a P2P network.

Other P2P applications

- Bitcoin is a peer-to-peer-based digital currency.

- The U.S. Department of Defense is conducting research on P2P networks as part of its modern network warfare strategy.[38] In May 2003, Anthony Tether, then director of DARPA, testified that the U.S. military uses P2P networks.

- Wireless community network, Netsukuku

- Dalesa, a peer-to-peer web cache for LANs (based on IP multicasting).

- Open Garden, connection sharing application that shares Internet access with other devices using Wi-Fi or Bluetooth.

- Research like the Chord project, the PAST storage utility, the P-Grid, and the CoopNet content distribution system.

- JXTA

Historical development

While P2P systems had previously been used in many application domains, the concept was popularized by file-sharing systems such as Napster (originally released in 1999). The basic concept of peer-to-peer computing was envisioned in earlier software systems and networking discussions, reaching back to principles stated in the first Request for Comments, R

Tim Berners-Lee's vision for the World Wide Web was close to a P2P network in that it assumed each user of the web would be an active editor and contributor, creating and linking content to form an interlinked "web" of links. This contrasts with the broadcasting-like structure of the web as it has developed over the years.[41]

A distributed messaging system that is often likened to an early peer-to-peer architecture is the USENET network news system, which is in principle a client–server model from the user or client perspective, when users read or post news articles. However, news servers communicate with one another as peers to propagate Usenet news articles over the entire group of network servers. The same consideration applies to SMTP email, in the sense that the core email-relaying network of mail transfer agents has a peer-to-peer character, while the periphery of e-mail clients and their direct connections is strictly a client–server relationship.

Sunday, August 11, 2013

Digital trends: Augmented Reality (AR)

What is Augmented Reality (AR): Augmented Reality Defined, iPhone Augmented Reality Apps and Games and More

What is Augmented Reality?

In 1990, Boeing researcher Tom Caudell first coined the term “augmented reality” to describe a digital display used by aircraft electricians that blended virtual graphics onto a physical reality. The computer science world’s definition of augmented reality (AR) is more detailed, but essentially the same: augmented reality is the interaction of superimposed graphics, audio and other sense enhancements over a real-world environment that’s displayed in real time.

Augmented Reality Past, Present and Future: How It Impacts Our Lives

Can’t stop hearing about it in the news, but wondering what makes “augmented reality” (AR) – the concept of overlaying computerized information, digital pop-up windows and/or virtual reality (VR) displays over real-world scenes and imagery – so exciting? Allow us to paint a picture. Imagine: you walk up to an airport terminal and breeze past the airline check-in. Afterwards, a wireless chip in your smartphone uses biometrics to verify your identity at a checkpoint, then a green arrow pops up and shows you the best path to the gate. When you get there, a blue circle shows you where to sit and helps you avoid the most common congestion points. You wait about five minutes until a soft chime tells you to get in line. The total time between drop-off and take-off: just 20 minutes.

In this near-future scenario, just one of many possible applications for the technology, augmented reality makes air travel more bearable. More than just a series of visual cues, the technology can even combine auditory sensors and other stimuli to make high-tech data part of your everyday life. As with robotics, there’s a visceral and physical representation of the underlying artificial intelligence involved. And with real-world implications that range from expediting everyday business travel to fueling potential military research, facilitating heightened responses in emergency scenarios and powering the world’s most immersive video games, augmented reality will forever change how we think about data and how we process information.

“Augmented reality will ultimately become a part of everyday life,” explains Sam Bergen, an associate art director for digital innovation at the ad agency Ogilvy and Mather. “Kids will use it in school as a learning tool – imagine Google Earth with AR, or AR-enabled textbooks. Shoppers will use it to see what products will look like in their home. Consumers will use it to visually determine how to set up a computer. Architects and city planners will even use it to see how new construction will look, feel, and affect the area they are developing.”

This year, apps such as Nearest Tube for iPhone (which displays real-time pop-ups alerting users to nearby train stations in London) and Tweetmondo for Android smartphones (which shows the status updates of nearby Twitter fans) offer an early glimpse at how the technology works. Even the unlikeliest candidates, such as the US Postal Service, A&E Network, and GE, are beginning to show how augmented reality could help us interact with and understand digital content in more interesting ways. Knowing this, it’s not too far-fetched to wager that in the not-too-distant future, augmented reality could become as integral to our lives as cell phones and Web 2.0 sites in terms of how it enhances reality and integrates with our surroundings.

Of course, there are dangers involved. Relying too much on augmented reality could mean more than just driving into a lake when you follow poor GPS directions. Following the prompts of a software program designed to make your life easier could lead to life-threatening disaster and a new form of hacking and identity theft. Given the tools to make augmented reality part of our lives, there is a potential for sensory overload, and for others to manipulate the everyday real-world feedback we take for granted. Still, in the right conditions, the technology could make our lives less complex and far easier.

Whether it is part science fiction or part a reaction to today’s increasingly overwhelming barrage of digital content, one thing is certain: augmented reality is an important step on the road to making technology more understandable and useful.

Interacting With Digital Objects

In many ways, AR is an attempt to meld digital content with physical objects. One of the best examples of this is the US Postal Service’s Priority Mail shipping simulator. At the site, you first see a digital representation of a shipping container. Then you take the object you want to ship – say, a child’s toy – and hold it up to a webcam. The box is overlaid on the toy so you can see whether the object will fit or whether you need the next larger size. What’s striking about this AR simulation, which went live this past summer, is that it offers immediate and clear benefits, demonstrating one of the most practical upsides of the technology.

Another example of augmented reality in action: the A&E Television Network created an augmented reality puzzle game to promote a magic show with Criss Angel. But as fun as these digital diversions seem, John Swords, who produced the AR portal, says the initial AR entertainment apps and games on the iPhone and other smartphone platforms are just the beginning. Today’s apps are limited to simple overlays, but the next phase of smartphone apps with augmented reality components will introduce actual interaction with digital objects.

“Most mobile apps, particularly on the iPhone where there is a software limitation, are using the GPS and compass to overlay data onto the video feed,” says Swords. “This will lead to the next point in the field’s evolution, which is to be able to directly manipulate objects in the video feed.”
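The GPS-and-compass approach Swords describes can be sketched in a few lines. Compute the compass bearing from the user to a point of interest, compare it with the direction the camera faces, and map the difference onto the screen. The sketch assumes a linear mapping across a hypothetical 60-degree horizontal field of view and a 640-pixel-wide screen, and it ignores tilt, altitude and lens distortion. The function names are illustrative, not any app's actual code.

```python
import math


def initial_bearing(lat1, lon1, lat2, lon2):
    """Great-circle initial bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360) % 360


def overlay_x(user_lat, user_lon, heading_deg, poi_lat, poi_lon,
              fov_deg=60.0, screen_width=640):
    """Horizontal pixel position for a point-of-interest label on the video feed,
    or None if the point lies outside the camera's horizontal field of view."""
    bearing = initial_bearing(user_lat, user_lon, poi_lat, poi_lon)  # from GPS fixes
    # Signed angle between the compass heading and the POI, in [-180, 180).
    relative = (bearing - heading_deg + 180) % 360 - 180
    if abs(relative) > fov_deg / 2:
        return None  # behind or beside the user: nothing to draw
    return round((relative / fov_deg + 0.5) * screen_width)
```

Consider a user at (0, 0) facing a heading of 80 degrees. A point due east at (0, 1) sits 10 degrees right of center and is drawn at x = 427. If the user faces due north instead, the same point falls outside the field of view and no label is drawn. Letting users manipulate objects in the feed, as Swords anticipates, would require tracking the scene in the video itself rather than relying on these two sensors alone.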

Military AR Applications

Charles Gannon, a professor at Saint Bonaventure, a futurist, and a frequent military consultant for the Department of Homeland Security, says that augmented reality could become an important aid in combat as well. For example, an anti-terrorism team could use AR technology to show HUD pop-ups for dangerous toxins in the area that are not discernible with the naked eye, or to analyze the sound of gunfire to identify which automatic weapon is being used and from what vantage point.

“A SWAT team would have greater perception,” says Gannon. “AR would connect at an instinctual level, helping them determine whether to move in closer. It’s not just about more maps or more statistics.” Those, Gannon says, can be a detriment in tense situations; rather, “it’s more about primal sensory data.” Equipped with AR technology in this scenario, police and military forces would have to think less about how to deploy or which tactics to use, as augmented reality offers real-time, enhanced feedback on their surroundings, allowing them to react faster to breaking developments. For example, a moment’s glance could be enough to identify where a sound is coming from, with an overlay on a visor marking the attackers’ possible locations or flagging assailants with color-coded warnings.

Gannon says another interesting use of AR has to do with representing physical objects that are not visible yet. For instance, in the airport terminal example cited above, this could consist of showing you a virtual picture of an airplane before it reaches the gate so you can see what type of aircraft it is and where you will be sitting. Often, we have a hard time understanding the absence of data, but augmented reality would readily fill in the gaps and help project future scenarios to give us a better understanding of developing variables and potential ways to react to them.

The Future of Augmented Reality

So where will all this innovation lead? Gannon says that in the next 10-20 years, augmented reality could become commonplace – but he also warns about the dangers of using AR technology just because it is available.

“Augmented reality is just another tool, and like all tools, we’ll need to match the problem with the right solution,” he says, giving the example of an incoming commuter flight and how he’d rather just get a text message and not have augmented reality even part of the equation.

In the end, however, experts agree on one thing: augmented reality is certainly a major step toward the virtual world intersecting with the physical, enhancing our perception and providing clues about underlying data that we would not normally understand. It’s up to tomorrow’s innovators to make sense of the technology and find ways to really make it compute.
